Deadlock in the wild

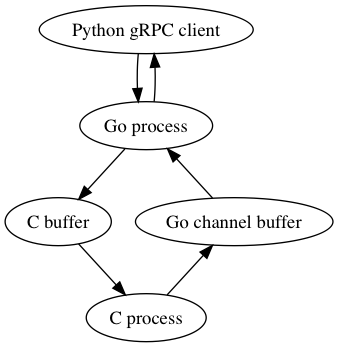

I ran into real world deadlock problem at work a little while back which was pretty cool. At a high level, the system looks like this:

The go process is a gRPC server with a go routine for handling the incoming requests from clients and another go routine for receiving notifications from the go channel buffer. It is more or less a shim layer between the C process and various gRPC clients. When a request comes in, it transforms that request into a language the C process can understand and queues it in a buffer in the C process (it does this using cgo). Now the request can also be for a subscription, in which case the client is indicating that it would like to be notified for specific state changes in the C process. The C process is single threaded and it will block if the go channel buffer is full.

We noticed that the go process would exit intermittently when the system was strained with a scaled configuration. The stack trace indicated that the go process had queued a request in the C buffer but hadn’t received any response in some outrageously long period of time (1 hour), so it classified this situation as an unknown timeout error and exited.

The code for the gRPC clients was much newer than the go process, so we decided to investigate there first. It seemed like one explanation for the behaviour was that the client could send a request for a large amount of data, then read it extremely slowly or not at all and simulateously send another request. If the data requested is large enough to fill the go channel buffer, then, as mentioned above, the C process will block until the client reads some of that data. During this time window, if another request is sent by the client, the go process will enqueue that request in the C buffer and start the timer waiting for a response from the C process. Since the C process is still blocked waiting for the client to read some data, it fails to handle this additional request so the timer expires and the go process exits. We inspected the client side code for any dependencies between the send/receive threads which would cause this failure to read data, but we found no issues.

The issue was in the go process. Even though there are two separate go routines for sending and receving information to and from the C process, the send go routine was actually just passing the request to the receive/main go routine which was doing the actual call to enqueue the request to the C buffer. This means that the receive call to get information from the go channel buffer and the send call to enqueue requests in the C buffer were actually happening in the same thread. Since the C process is also single-threaded, deadlock can occur. Here’s how it happens:

- The gRPC client sends a request for a huge amount of data

- The go process enqueues the request in the C buffer and starts a timer waiting for a response from the C process

- The single-threaded C process fills up the go channel buffer with its response and blocks waiting for the data to be read

- In the meantime, any request sent by the client will cause deadlock

The deadlock occurs between the C process and the go process. Let’s call the go process A and the C process B. A is waiting for B to service this new request. B can’t service this new request because it is waiting for A to read some data from the go channel buffer and send it to the client. However, A can’t read the data from the go channel buffer because it is waiting for B to service the new request. So there you have it - watch out for sneaky hidden deadlock if you have a bunch of communicating processes!